What AI looks like in 2025

Co-Founder of OpenAI: John Schulman

Credit and Thanks:

Based on insights from Dwarkesh Patel.

Today’s Podcast Host: Dwarkesh Patel

Title

Reasoning, RLHF, & Plan for 2027 AGI

Guest

John Schulman

Guest Credentials

John Schulman is a co-founder of OpenAI and a key architect of ChatGPT. He earned his Ph.D. in electrical engineering and computer sciences at UC Berkeley, where he initially focused on neuroscience before moving to robotics and AI under Professor Pieter Abbeel. At OpenAI, Schulman led the reinforcement learning team that developed ChatGPT.

Podcast Duration

1:36:54

This Newsletter Read Time

Approx. 6 mins

Brief Summary

John Schulman, co-founder of OpenAI, discusses how AI models are built, focusing on the distinction between the pre-training and post-training phases. He outlines the capability gains he expects over the next few years, including the ability to handle longer and more complex tasks, and stresses the importance of aligning AI behavior with human values.

Deep Dive

John Schulman elaborates on pre-training and post-training, the two main phases of AI development, and on their distinct roles in shaping future capabilities. Pre-training trains models on vast datasets from the internet, teaching them to generate content that imitates human language and behavior. Schulman explains that this phase builds a foundational understanding of language, but it is post-training that refines these models into practical tools such as chat assistants. He envisions that by 2025 models will be able to manage complex tasks autonomously, such as carrying out an entire coding project from high-level instructions rather than merely offering suggestions. This shift will hinge on training models to complete longer, more intricate tasks, which Schulman sees as a significant source of untapped potential.
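To make the pre-training objective concrete, here is a toy sketch in Python. It assumes the standard next-token prediction setup and replaces the neural network with a hypothetical bigram counting model; the names `train_bigram`, `next_token_prob`, and `avg_nll` are illustrative and do not come from the podcast. The number it reports, the average negative log-likelihood per token, is the kind of loss that pre-training drives down on web-scale text.

```python
import math
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count how often each token follows each other token (toy stand-in for pre-training)."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def next_token_prob(counts, prev, nxt, vocab_size, alpha=1.0):
    """Add-alpha smoothed P(nxt | prev), so unseen pairs keep a nonzero probability."""
    following = counts[prev]
    return (following[nxt] + alpha) / (sum(following.values()) + alpha * vocab_size)


def avg_nll(counts, tokens, vocab_size):
    """Average next-token negative log-likelihood: the loss pre-training minimizes."""
    losses = [
        -math.log(next_token_prob(counts, prev, nxt, vocab_size))
        for prev, nxt in zip(tokens, tokens[1:])
    ]
    return sum(losses) / len(losses)
```

Trained on the corpus `"the cat sat on the mat the cat ran"`, this model assigns its own training text a lower average loss than the scrambled sequence `"mat the on sat cat"`, which is the sense in which pre-training teaches a model to mimic its data. Post-training then reshapes that raw imitator into an assistant.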

The conversation also covers the challenge of teaching models to reason effectively. Schulman notes that while current models perform well on a per-token basis, they struggle to stay coherent over extended tasks. He suggests that long-horizon reinforcement learning, meaning training on tasks that unfold over many steps, could let models plan and execute work over longer periods, closer to how humans reason. However, he cautions that reaching human-level reasoning will require overcoming several bottlenecks, including models' difficulty with ambiguity and their reliance on well-structured tasks.

He describes Reinforcement Learning from Human Feedback (RLHF) as a crucial component in improving model performance. RLHF trains models to maximize human approval by producing outputs that match user preferences, steering the model toward desired behaviors. Schulman adds that while current RLHF methods are effective, they may need to evolve as models become more capable and are used in higher-stakes scenarios.
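The "maximize human approval" idea can be sketched in a few lines. This is a minimal illustration, not OpenAI's method: it assumes a hypothetical linear reward model over two made-up features (helpfulness, hallucination), fits it to human comparison pairs with the commonly used Bradley-Terry pairwise loss, and then uses best-of-n selection as a simple stand-in for the RL policy update (such as PPO) that production RLHF systems run against the reward model.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def reward(w, features):
    """Hypothetical linear reward model: score = w . features."""
    return sum(wi * fi for wi, fi in zip(w, features))


def preference_loss(w, chosen, rejected):
    """Bradley-Terry loss: -log P(human prefers `chosen` over `rejected`)."""
    return -math.log(sigmoid(reward(w, chosen) - reward(w, rejected)))


def train_reward_model(pairs, dim, lr=0.5, epochs=200):
    """Fit w by gradient descent on (chosen, rejected) comparison pairs."""
    w = [0.0] * dim
    for _ in range(epochs):
        for chosen, rejected in pairs:
            margin = reward(w, chosen) - reward(w, rejected)
            # d(loss)/d(margin) = -(1 - sigmoid(margin))
            grad_margin = -(1.0 - sigmoid(margin))
            for i in range(dim):
                w[i] -= lr * grad_margin * (chosen[i] - rejected[i])
    return w


def best_of_n(w, candidates):
    """Return the candidate the reward model scores highest (best-of-n, not PPO)."""
    return max(candidates, key=lambda f: reward(w, f))
```

For example, training on `[([1.0, 0.0], [0.0, 1.0]), ([0.8, 0.1], [0.9, 0.9])]` learns a negative weight on the hallucination feature, so `best_of_n` prefers `[0.5, 0.1]` over `[0.5, 0.9]`. A real system would replace the hand-made features with a learned model and the selection step with policy optimization.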

Reflecting on the development of ChatGPT, Schulman recounts the journey from early instruction-following models to ChatGPT's conversational abilities. He highlights the importance of user feedback in refining the model's behavior, noting that early versions often struggled with hallucinations: fabricated or false outputs that could mislead users. The iterative fine-tuning process, which involved collecting diverse data and adjusting the model's responses, ultimately produced a more reliable and user-friendly assistant.

Schulman also shares insights into what makes a good reinforcement learning researcher. He emphasizes the need for a comprehensive understanding of the entire AI stack, from algorithms to data collection and annotation processes. Curiosity and a willingness to experiment are essential traits, as researchers must navigate the complexities of training models to achieve desired outcomes.

The discussion further explores the critical issue of keeping humans in the loop as AI systems become more capable. Schulman acknowledges the potential risks of fully autonomous AI systems, advocating for a balanced approach where human oversight remains integral to decision-making processes. He suggests that regulatory frameworks may be necessary to ensure that AI systems operate safely and ethically, particularly in high-stakes environments.

As for the state of AI research, Schulman addresses concerns about hitting a plateau in model performance. He argues that while there are challenges related to data limitations, the field is still evolving, and significant improvements can be made through better training methodologies. He believes that the complexity of post-training processes creates a competitive moat for organizations that can effectively navigate these challenges, as the knowledge and expertise required are not easily replicated.

Key Takeaways

There is a critical distinction between pre-training and post-training in AI model development.

Future AI models are expected to autonomously manage complex tasks, such as executing entire coding projects.

Reinforcement Learning from Human Feedback (RLHF) is essential for aligning AI outputs with human preferences.

The integration of multimodal data will enhance AI's ability to interact with various forms of information.

Ethical considerations and human oversight remain paramount as AI capabilities expand.

Actionable Insights

Invest in diverse datasets to improve AI model generalization across tasks.

Develop user interfaces that facilitate seamless interaction between humans and AI systems.

Implement ethical guidelines to ensure responsible AI deployment in high-stakes environments.

Encourage collaboration between AI developers and domain experts to enhance model training.

Monitor AI outputs continuously to identify and mitigate potential risks associated with advanced capabilities.

Why it’s Important

The insights shared in this conversation highlight the rapid advancements in AI technology and the implications for various industries. Understanding the nuances of pre-training and post-training processes is essential for organizations looking to leverage AI effectively. As AI systems become more capable, the need for ethical considerations and human oversight becomes increasingly critical. This knowledge equips stakeholders to navigate the complexities of AI deployment responsibly, ensuring alignment with societal values and needs.

What it Means for Thought Leaders

For thought leaders, the discussion underscores the importance of engaging in the ethical discourse surrounding AI development. As AI technologies evolve, leaders must advocate for frameworks that prioritize safety, transparency, and accountability. This conversation serves as a reminder that the future of AI is not just about technological advancement but also about ensuring that these systems serve humanity positively. By understanding the intricacies of AI training and deployment, thought leaders can better influence policy and practice in this rapidly changing landscape.

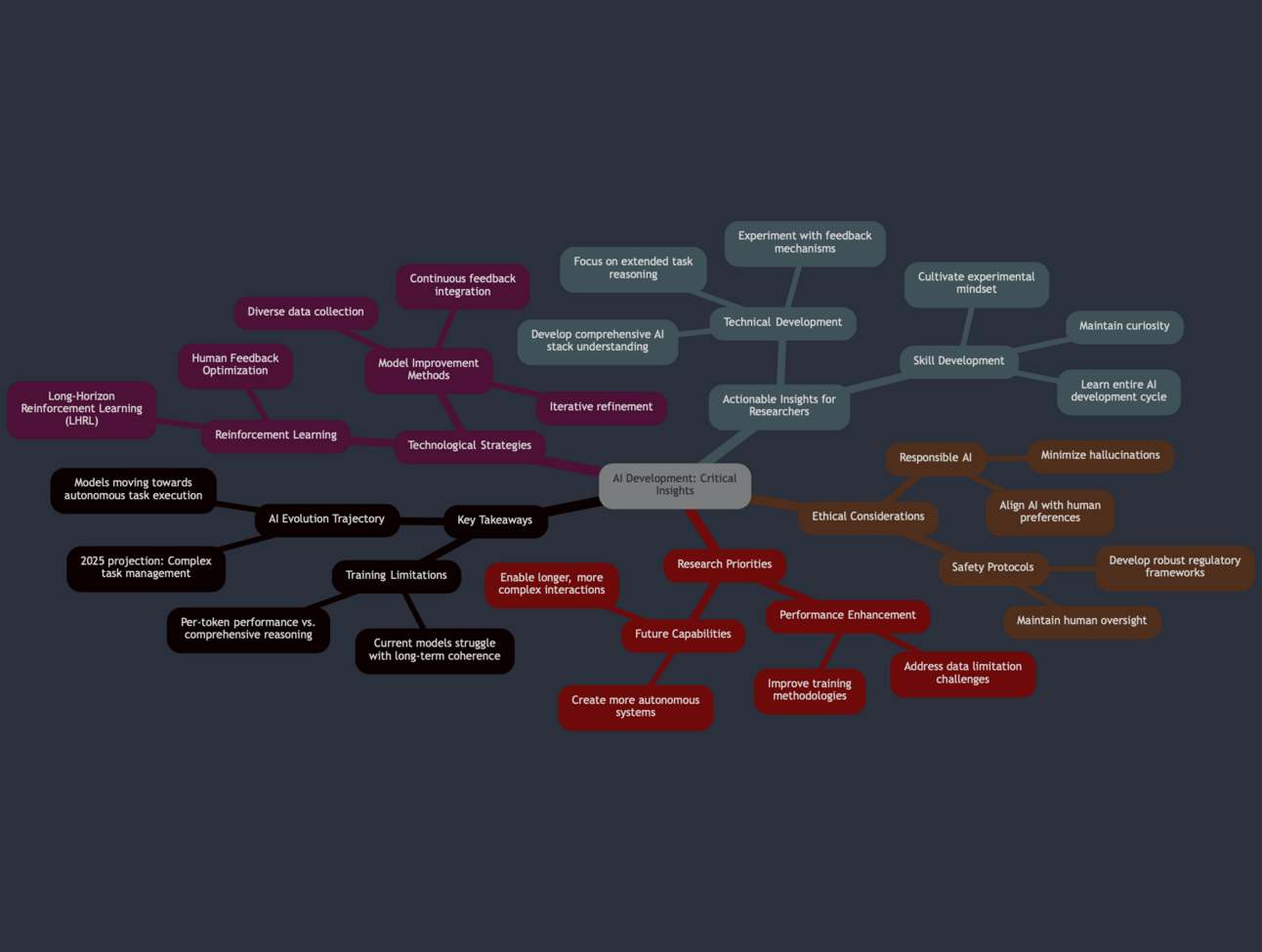

Mind Map

Key Quote

"If AGI came way sooner than expected we would definitely want to be careful about it. We might want to slow down a little bit on training and deployment until we're pretty sure we know we can deal with it safely."

Future Trends & Predictions

As AI continues to evolve, the integration of multimodal capabilities will likely lead to more sophisticated interactions between humans and machines. This trend aligns with current advancements in AI applications across various sectors, including healthcare, finance, and education. The push for ethical AI development will also gain momentum, prompting organizations to establish robust frameworks for responsible deployment. As these technologies become more embedded in daily life, the demand for transparency and accountability will shape the future landscape of AI.

Check out the podcast here:

Latest in AI

1. Andreessen Horowitz (a16z) participated in xAI's recent $6 billion Series B funding round, alongside other high-profile investors such as Sequoia Capital and Fidelity Management and Research Company. The investment supports xAI's plans to bring its first products to market, including the Grok chatbot, and to accelerate research and development of future AI technologies. The round valued xAI at $24 billion, significantly higher than initially projected, reflecting strong investor confidence in Elon Musk's AI venture. The firm's involvement in xAI aligns with its growing interest in artificial intelligence and its potential to reshape various industries.

2. 44% of Americans planned to use AI tools such as ChatGPT to find deals this Black Friday. The figure reflects a growing number of shoppers turning to technology to streamline their holiday shopping instead of hunting for bargains the traditional way. As consumers look for efficient ways to navigate the flood of available discounts, AI is becoming an essential ally in making informed purchasing decisions.

3. OpenAI has launched an enterprise platform featuring o1-mini, designed as a legal assistant that can detect even minor errors in legal documents. o1-mini, which excels at STEM reasoning and at tasks requiring precision, offers a cost-effective option for law firms and legal departments that need document review. Drawing on the model's reasoning abilities, the assistant can analyze complex legal paperwork with high accuracy, potentially reducing human error and improving overall document quality. The platform marks a significant step for AI-powered legal technology, quickly catching nuanced mistakes that human reviewers might otherwise overlook.

Useful AI Tools

1. Quso.ai is an all-in-one social media AI tool that automates content creation, scheduling, and management, enabling users to enhance their online presence efficiently.

2. GenFM, now available on the ElevenReader app, allows users to transform any text, such as PDFs and articles, into engaging AI-generated podcasts featuring lifelike voices.

3. Maxim AI is an end-to-end platform that enhances AI product development and deployment by providing tools for evaluation, experimentation, and observability.

Startup World

1. Y Combinator's Demo Day is returning to an in-person format, starting with an Alumni Demo Day for the Fall 2024 class held at the end of the Thanksgiving weekend. This event featured pitches from 93 startups, generating excitement among attendees, including notable founders like Esther Crawford and Avni Patel Thompson, who highlighted the supportive atmosphere for current entrepreneurs. The main Demo Day is scheduled for December 4, where founders will present to an audience of approximately 1,500 investors and press. Following the pandemic's shift to virtual events, Y Combinator is emphasizing the value of in-person interactions, marking a significant return to its traditional format.

2. Brussels-based events tech startup AnyKrowd raised €4M in a Series A funding round led by an undisclosed investor. The company develops AI-powered solutions for crowd management at events, addressing a critical need in the events industry. AnyKrowd plans to use the funds to expand its operations and enhance its technology platform.

3. Media, services, and education company Bertelsmann has partnered with ElevenLabs to leverage AI for enhancing its storytelling capabilities. This collaboration aims to integrate ElevenLabs' advanced AI voice technology into Bertelsmann's content production processes. The partnership signifies a growing trend of traditional media companies embracing AI technologies to innovate their content creation strategies.

Analogy

Training an AI model is like preparing an athlete for the Olympics. Pre-training is the foundational phase, akin to building strength and endurance through general fitness routines. It gives the athlete the broad skills needed to perform. Post-training, however, is where specialization happens—like honing a sprinter’s technique to shave milliseconds off their time. John Schulman highlights that this refinement is what transforms AI into powerful, practical tools, just as targeted coaching turns potential into gold medals. The real challenge lies in teaching AI to think ahead, much like teaching a chess player to master not just moves, but strategies.

Thanks for reading, have a lovely day!

Jiten, One Cerebral

All summaries are based on publicly available content from podcasts. One Cerebral provides complementary insights and encourages readers to support the original creators by engaging directly with their work: listening, liking, commenting, or subscribing.
